Improving Test Execution Efficiency with Test Impact Analysis

Regression testing of changes is usually done by re-running all automated or manual tests of a system (retest all). As more and more features are added to a software system over its lifetime, the number and overall runtime of its tests increase as well. The longer the test suite takes to run, the fewer retest-all runs are possible, so the time between introducing a bug with a change and getting the result of a failed regression test grows too. Re-executing all tests is not only expensive, it is also inefficient, since most of the tests in the test suite do not exercise the code touched by a given change.

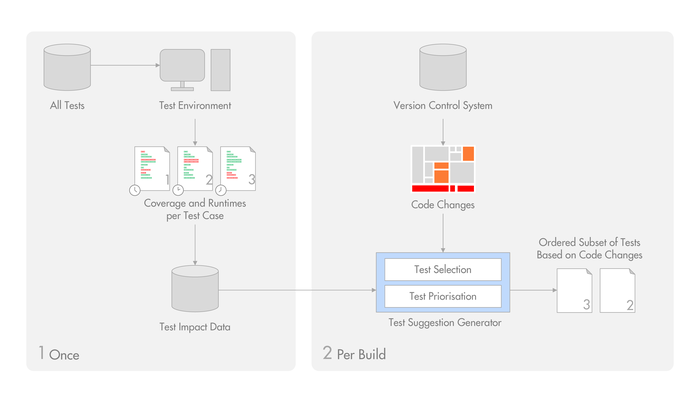

Test Impact Analysis provides an automated mechanism to select and prioritize the tests to be executed, based on the coverage of previous test runs. For that purpose, Testwise Coverage must be recorded and uploaded to Teamscale. In follow-up runs, the Test Runner asks Teamscale for the tests that need to be executed to test the changes of the current commit. Teamscale uses the data from the initial run to determine which of the tests will actually execute the changed code. These impacted tests are then prioritized such that tests with a higher probability of failing are executed before the other tests. The Test Runner can then start executing tests based on this list.
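The selection and prioritization idea can be illustrated with a minimal sketch. It is not Teamscale's actual algorithm: the `RecordedTest` model, the `select_impacted_tests` function, and the ranking heuristic (tests covering more changed methods first, then shorter tests first) are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecordedTest:
    """Per-test data from a previous run (hypothetical model, not a Teamscale type)."""
    name: str
    covered_methods: frozenset
    duration_seconds: float


def select_impacted_tests(records, changed_methods):
    """Return the names of tests whose recorded coverage touches a changed method."""
    changed = set(changed_methods)
    # Selection: keep only tests that executed at least one changed method.
    impacted = [r for r in records if r.covered_methods & changed]
    # Prioritization (assumed heuristic): more changed methods covered first,
    # ties broken by shorter duration.
    impacted.sort(key=lambda r: (-len(r.covered_methods & changed), r.duration_seconds))
    return [r.name for r in impacted]


records = [
    RecordedTest("LoginTest", frozenset({"Auth.login", "Session.create"}), 2.0),
    RecordedTest("CartTest", frozenset({"Cart.add"}), 0.5),
    RecordedTest("CheckoutTest", frozenset({"Cart.add", "Auth.login", "Order.place"}), 4.0),
]

# prints ['CheckoutTest', 'CartTest']
print(select_impacted_tests(records, ["Cart.add", "Order.place"]))
```

`LoginTest` is skipped because none of the methods it executed in the recorded run were changed.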

On Impacted Tests

Please note that the selection of impacted tests is not guaranteed to include all tests that may fail because of a code change. In particular, changes to resource or configuration files that influence test execution but are not tracked as coverage are not taken into consideration. It is therefore recommended to execute all tests at regular intervals to catch the impact of these changes as well.
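One way a build script might act on this recommendation is sketched below. The function name `should_run_all_tests`, the one-day interval, and the `CODE_SUFFIXES` set are hypothetical choices, not Teamscale settings. Falling back to a full run whenever a non-code file changes is an additional assumption that goes beyond the interval-based recommendation above.

```python
from datetime import datetime, timedelta
from pathlib import PurePosixPath

# Assumption: file types for which coverage is recorded in this project.
CODE_SUFFIXES = {".java", ".py", ".cs"}


def should_run_all_tests(changed_files, last_full_run, now, max_age=timedelta(days=1)):
    """Decide whether to run the full suite instead of only the impacted tests."""
    # Regular interval: the last full run is too old.
    if now - last_full_run >= max_age:
        return True
    # Assumed extra safeguard: a changed file that is not tracked as coverage
    # (e.g. a resource or configuration file) triggers a full run.
    return any(PurePosixPath(path).suffix not in CODE_SUFFIXES for path in changed_files)


now = datetime(2024, 1, 2, 12, 0)
recent_full_run = datetime(2024, 1, 2, 6, 0)

print(should_run_all_tests(["src/App.java"], recent_full_run, now))
print(should_run_all_tests(["config/app.yml"], recent_full_run, now))
```

With these inputs, the first call stays with the impacted tests, while the second call requests a full run because a configuration file changed.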

Setting up the Test Impact Analysis

- For Java, please refer to our TIA Java tutorial.

- For other languages and technologies, our how-to for uploading Testwise Coverage is a good starting point.

Determining tests covering a method

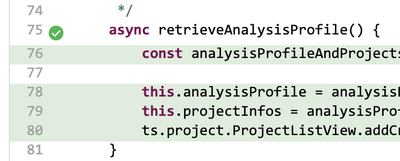

After the initial Testwise Coverage upload, the Code view shows an indicator for each method that has been covered:

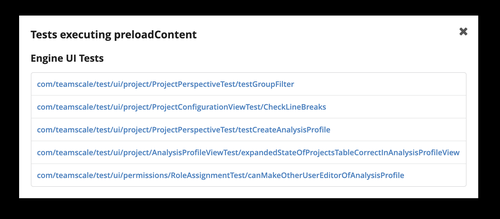

A click on the indicator opens a dialog box, which presents a list of all tests that executed the selected method:

Viewing detailed test information

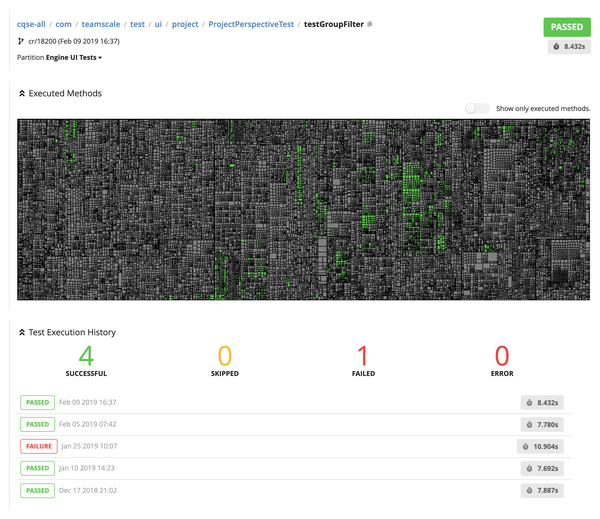

A single test can be inspected either by following the links in the dialog box or by selecting the test in the Test executions section of the Test Gaps perspective. The detail view depicted below shows the most recent test execution result at the top. The treemap below it shows the coverage of this single test on the whole codebase. With the Show only executed methods option, the treemap can be restricted to the executed code regions. At the bottom, the history of the 10 previous test executions is shown with the corresponding durations and test results. A click on one of the previous test executions opens the test details view for that execution, e.g., to inspect the error message of a previous failure.

Determining impacted tests over large time intervals

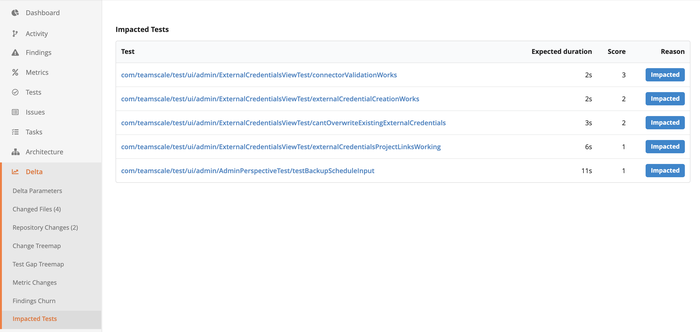

In the Delta perspective, the list of impacted tests can be inspected by selecting either a time range or a merge scenario. A sample result is shown here:

The list will show all tests that are impacted by the selected changes. A prerequisite for this is that a Testwise Coverage report has been uploaded for a timestamp that lies before the end of the inspected timeframe.

The impacted tests shown in the Delta perspective can be used to verify that everything is set up correctly on the server side. They can also be used as a utility to support manual test selection.
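When the list of impacted tests is unexpectedly empty, the upload prerequisite mentioned above is worth checking first. The sketch below expresses that check under the assumption that the upload timestamps are already known to the caller. `has_usable_coverage` is a hypothetical helper, not part of any Teamscale API.

```python
from datetime import datetime


def has_usable_coverage(upload_timestamps, timeframe_end):
    """True if at least one Testwise Coverage upload lies before the end of the timeframe."""
    return any(ts < timeframe_end for ts in upload_timestamps)


uploads = [datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 5, 9, 0)]

print(has_usable_coverage(uploads, datetime(2024, 3, 2)))   # an upload precedes the end
print(has_usable_coverage(uploads, datetime(2024, 2, 20)))  # all uploads are later
```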