Improving Code Quality

Teamscale can do much more than the average static code analyzer. Here is a quick overview:

Improving Code Quality using Quality Goals

Teamscale's default configuration offers you commonly used and actionable metrics with respect to

- the structure of your code (file sizes, method lengths and nesting depths),

- redundancy caused by copy & paste programming,

- documentation in terms of source code comments,

- violations of coding conventions, and

- bug patterns (e.g., derived from data flow analysis).

It can also easily be extended with custom checks tailored to your specific needs.

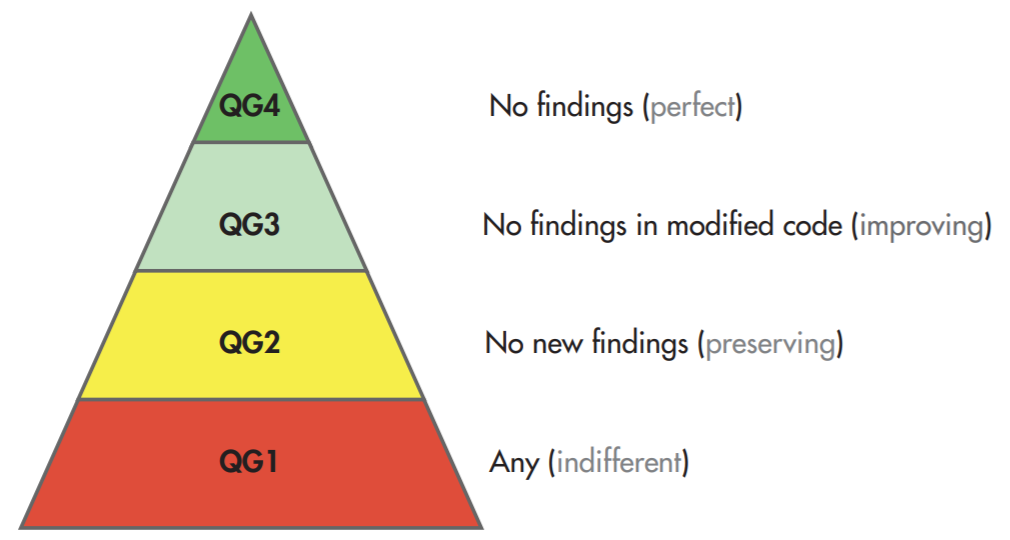

After choosing appropriate metrics, improving code quality requires a realistic goal: a quality goal. Without one, developers are likely to end up more frustrated than before. The right goal depends on your project's history. If you have just started developing a new system from scratch (a green-field project), the goal of zero findings overall may still be feasible. For grown software, however, we strongly recommend accepting the current quality status as it is and starting from there. Either choose the goal of preserving the status quo and at least not making it worse (i.e., not introducing new findings), or go one step further and improve the code you are working on anyway (i.e., remove findings in code that is modified due to a feature change request).
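The goal options above can be expressed as a simple filter over findings. The following is a minimal Python sketch, not Teamscale's implementation or API: the `Goal` names, the `Finding` fields, and the assumption that each finding is already flagged as new or as located in modified code (relative to a chosen baseline) are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Goal(Enum):
    """Hypothetical goal levels matching the options described above."""
    ZERO_FINDINGS = "zero findings"      # feasible for green-field projects
    NO_NEW_FINDINGS = "keep status quo"  # do not introduce new findings
    CLEAN_MODIFIED = "improve"           # also clean up code you touch anyway


@dataclass(frozen=True)
class Finding:
    file: str
    line: int
    message: str
    is_new: bool            # assumed: introduced after the baseline
    in_modified_code: bool  # assumed: located in code changed since the baseline


def goal_violations(findings: List[Finding], goal: Goal) -> List[Finding]:
    """Return the findings that violate the chosen quality goal."""
    if goal is Goal.ZERO_FINDINGS:
        return list(findings)
    if goal is Goal.NO_NEW_FINDINGS:
        return [f for f in findings if f.is_new]
    # Goal.CLEAN_MODIFIED: new findings plus old findings left in touched code
    return [f for f in findings if f.is_new or f.in_modified_code]


# Hypothetical example data for a grown system.
example = [
    Finding("Parser.java", 120, "Method too long", is_new=False, in_modified_code=False),
    Finding("Parser.java", 310, "Nesting too deep", is_new=False, in_modified_code=True),
    Finding("Lexer.java", 42, "Code clone", is_new=True, in_modified_code=True),
]

for goal in Goal:
    print(goal.value, len(goal_violations(example, goal)))
```

With this example data, the three goals report 3, 1, and 2 violations, respectively: the stricter the goal, the more of the legacy findings count against it.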

Quality Goals

Teamscale helps you enforce your quality goal. Many existing tools operate in batch mode and analyze the entire system at once. Not only is this time-consuming, it also prevents them from accurately determining which findings are new or located in modified code. Teamscale, in contrast, analyzes every single commit to your repository [1]. Hence, it knows exactly when a specific finding was introduced into the system. Consequently, Teamscale can immediately reveal violations of your quality goal, for example, when a developer has just created a new finding or has missed the chance to remove a finding in code they touched recently.
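The advantage of per-commit analysis can be illustrated by replaying a commit history and recording when each finding first appears. This is a minimal Python sketch under the assumption that findings carry a stable identity across commits (here a hypothetical `(check, file, method)` key, so that shifting line numbers do not create "new" findings); it does not reflect how Teamscale tracks findings internally.

```python
from typing import Dict, Iterable, Set, Tuple

# Hypothetical stable finding identity: (check name, file, enclosing method).
FindingKey = Tuple[str, str, str]


def first_seen(history: Iterable[Tuple[str, Set[FindingKey]]]) -> Dict[FindingKey, str]:
    """Map each finding present after the last commit to the commit that introduced it.

    `history` yields (commit_id, findings_after_that_commit) in commit order.
    A finding that is removed and later reappears is attributed to the later commit.
    """
    introduced: Dict[FindingKey, str] = {}
    previous: Set[FindingKey] = set()
    for commit_id, current in history:
        for key in current - previous:
            introduced[key] = commit_id  # finding added by this commit
        for key in previous - current:
            introduced.pop(key, None)    # finding removed by this commit
        previous = current
    return introduced


history = [
    ("c1", {("LongMethod", "Parser.java", "parse")}),
    ("c2", {("LongMethod", "Parser.java", "parse"), ("Clone", "Lexer.java", "scan")}),
    ("c3", {("Clone", "Lexer.java", "scan")}),
]
print(first_seen(history))
```

Here commit `c2` is identified as the origin of the clone, while the long method removed in `c3` no longer appears. A batch analysis of only the latest state would see the clone but could not tell which commit introduced it.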

Why Fast Feedback is Essential

Most importantly, developers do not have to wait a day; they can fix problems immediately while they are still working on the subject. Teamscale provides feedback within seconds after a commit and specifically informs developers about the quality impact of their change. Personal notifications can be configured as well. Personal feedback is crucial for reducing the information flood and focusing on the essentials: it lets developers concentrate on new problems, regardless of the past, and makes small first successes visible.

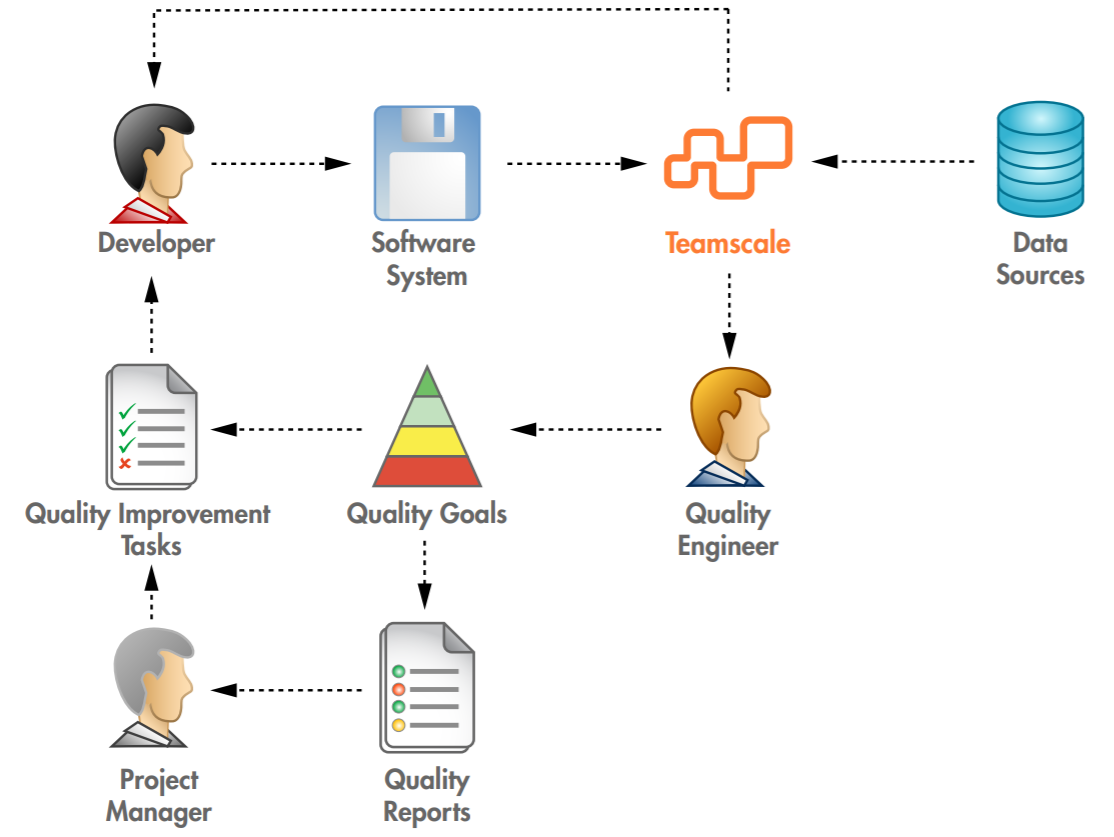

In almost all software projects, feature pressure and time constraints dominate everyday decisions. In response, many teams have created the role of a quality engineer to ensure that quality improvements do not fall off the table. Teamscale also addresses the quality engineer's need to monitor the quality trend, understand outliers in a specific metric, and report to management. Improving quality without management support is like Icarus striving for the sun. Hence, when management provides resources, creating transparency about how they are used benefits both sides. This is often achieved through a clearly defined quality control process, as in this example:

Code Quality Control Process

Such a process increases the satisfaction of all stakeholders and leads to long-term success [1].

In this process, software intelligence creates transparency and positive quality awareness. It supports developers in their daily work and makes the project manager aware of the current quality status and of how the provided resources are used. With this information, the project manager can steer the project more confidently, without risking that feature pressure has an uncontrollable impact on code quality.

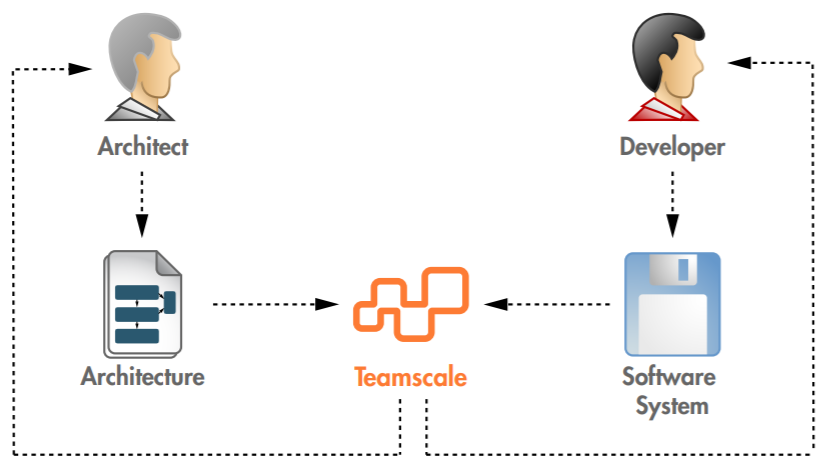

Managing Architecture Quality

In addition to code and test quality, Teamscale also helps you manage your architecture quality. Many teams have at least an informal understanding of what their system's architecture roughly looks like in terms of component diagrams. But only a few have documented it, and even fewer have a code base that conforms to it.

First, Teamscale helps you model the architecture. Using a simple graphical user interface, you can specify components and the dependencies you want to allow between them. Second, Teamscale continuously checks whether your current code base conforms to this specification and notifies the developer of unwanted dependencies immediately after a commit. After inspection, it is up to the developer either to clean up the code (if the dependency is in fact unwanted) or to adapt the specification (if you can live with the new dependency):

Architecture Quality Control Process

Teamscale helps you avoid the commonly observed loss of architectural knowledge [2] and keep your implementation consistent with the intended architecture.
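The conformance check described above boils down to mapping code-level dependencies onto components and comparing them with the allowed component dependencies. The following Python sketch assumes a hypothetical architecture with three components identified by package prefixes; the names `COMPONENTS` and `ALLOWED` and all package names are illustrative only and are not Teamscale's architecture format.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical architecture specification: components own package prefixes,
# and only the listed component-to-component dependencies are permitted.
COMPONENTS: Dict[str, Tuple[str, ...]] = {
    "ui": ("com.example.ui",),
    "logic": ("com.example.logic",),
    "persistence": ("com.example.db",),
}
ALLOWED: Set[Tuple[str, str]] = {("ui", "logic"), ("logic", "persistence")}


def component_of(type_name: str) -> Optional[str]:
    """Return the component owning a fully qualified type, or None if unmapped."""
    for component, prefixes in COMPONENTS.items():
        for prefix in prefixes:
            if type_name == prefix or type_name.startswith(prefix + "."):
                return component
    return None


def find_violations(dependencies: List[Tuple[str, str]]) -> List[Tuple[str, str, str, str]]:
    """Return (source, target, source component, target component) for unwanted dependencies."""
    violations = []
    for source, target in dependencies:
        src, dst = component_of(source), component_of(target)
        if src is None or dst is None or src == dst:
            continue  # unmapped code or a dependency inside one component
        if (src, dst) not in ALLOWED:
            violations.append((source, target, src, dst))
    return violations


# Dependencies extracted from a (hypothetical) commit.
deps = [
    ("com.example.ui.MainView", "com.example.logic.OrderService"),
    ("com.example.ui.MainView", "com.example.db.OrderTable"),
    ("com.example.logic.OrderService", "com.example.db.OrderTable"),
]
for violation in find_violations(deps):
    print(violation)
```

Only the direct dependency from the UI to the database layer is reported. The developer would then either remove it or, if it is acceptable, add `("ui", "persistence")` to the allowed set, mirroring the two options in the process above. This sketch silently skips unmapped code; a stricter variant could report it as well.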

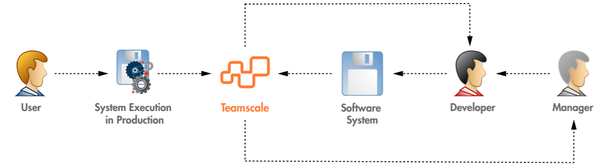

Analyzing Feature Usage

During any quality assurance process, managers and developers prefer to focus on the parts of the system that are used frequently. Nobody wants to waste money on code that users never even execute. Even though such code has already been written, it still causes maintenance overhead that should not be underestimated [3].

On the one hand, Teamscale integrates data from a usage profiler running in the system's production environment. On the other hand, it analyzes the development process. Combining both sources provides valuable input for managing software maintenance costs: it supports developers and managers in deciding whether to delete code that is never executed, and it helps them make sure that only features that are actually used get extended.

Analysis Process of Feature Usage
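Combining the two information sources can be sketched as a join between the set of methods observed in production and the change history. The Python sketch below assumes both inputs are already available as plain data (a set of executed method names from a hypothetical profiler export, and per-method change counts from version control); the category names are illustrative and not Teamscale terminology.

```python
from typing import Dict, Iterable, List, Mapping, Set


def classify_usage(
    all_methods: Iterable[str],
    executed: Set[str],
    change_counts: Mapping[str, int],
) -> Dict[str, List[str]]:
    """Group methods by production usage and development activity."""
    report: Dict[str, List[str]] = {
        "used": [],                 # executed in production
        "unused_but_modified": [],  # never executed, yet still being changed
        "unused_untouched": [],     # never executed and not changed
    }
    for method in sorted(all_methods):
        if method in executed:
            report["used"].append(method)
        elif change_counts.get(method, 0) > 0:
            report["unused_but_modified"].append(method)
        else:
            report["unused_untouched"].append(method)
    return report


# Hypothetical inputs.
methods = {"Order.create", "Order.cancel", "Report.legacyExport"}
executed_in_production = {"Order.create"}
changes = {"Order.create": 5, "Order.cancel": 2}

for category, names in classify_usage(methods, executed_in_production, changes).items():
    print(category, names)
```

Methods in `unused_but_modified` are the most telling: development effort went into code that was not executed during the profiling period, which is a signal to question further extensions. Methods in `unused_untouched` are candidates for deletion. Since "never executed" only holds for the observed period, such results call for inspection rather than automatic removal.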

Further Reading:

- [1] D. Steidl, F. Deissenboeck, M. Poehlmann, R. Heinke, and B. Uhink-Mergenthaler. Continuous Software Quality Control in Practice. In: Proceedings of the IEEE International Conference on Software Maintenance and Evolution (ICSME), 2014.

- [2] M. Feilkas, D. Ratiu, and E. Jürgens. The loss of architectural knowledge during system evolution: An industrial case study. In: Proceedings of the 17th IEEE International Conference on Program Comprehension (ICPC’09), 2009.

- [3] S. Eder, M. Junker, E. Jürgens, B. Hauptmann, R. Vaas, and K.-H. Prommer. How much does unused code matter for maintenance? In: Proceedings of the 34th International Conference on Software Engineering (ICSE’12), 2012.