Tests View

The Tests view displays all tests that are known to Teamscale. Tests can be added to Teamscale by uploading test execution reports in one of the supported formats and by extracting tests directly from the source code.

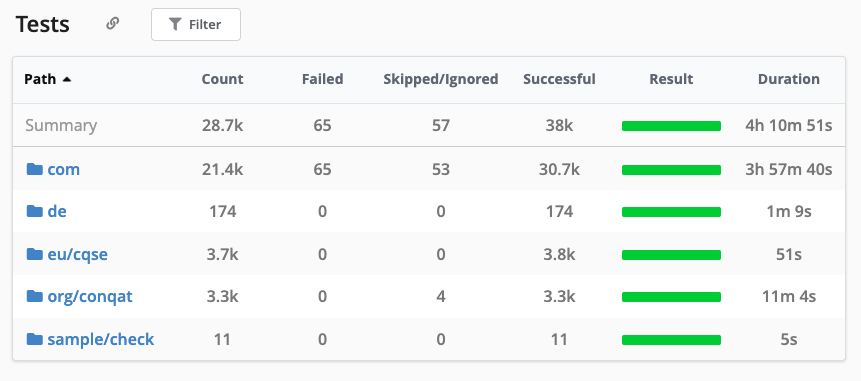

Hierarchical view

The tests are displayed in a hierarchical structure based on the path of the test.

Actions

This view contains two actions:

- Link: The button copies a link to this view to the clipboard.

- Filter: The button switches to the test filter view.

Metrics

The available metrics are:

- Count: The count of tests in the container

- Failed: The count of tests that have failed in the latest test run

- Skipped/Ignored: The count of tests that have been skipped (e.g. because they are not compatible with the execution platform) or ignored (e.g. because the test is disabled in the code or test configuration)

- Successful: The count of tests that have passed in the latest test run

- Result: The aggregated assessment of the previously listed test execution results

- Duration: The aggregated test execution duration

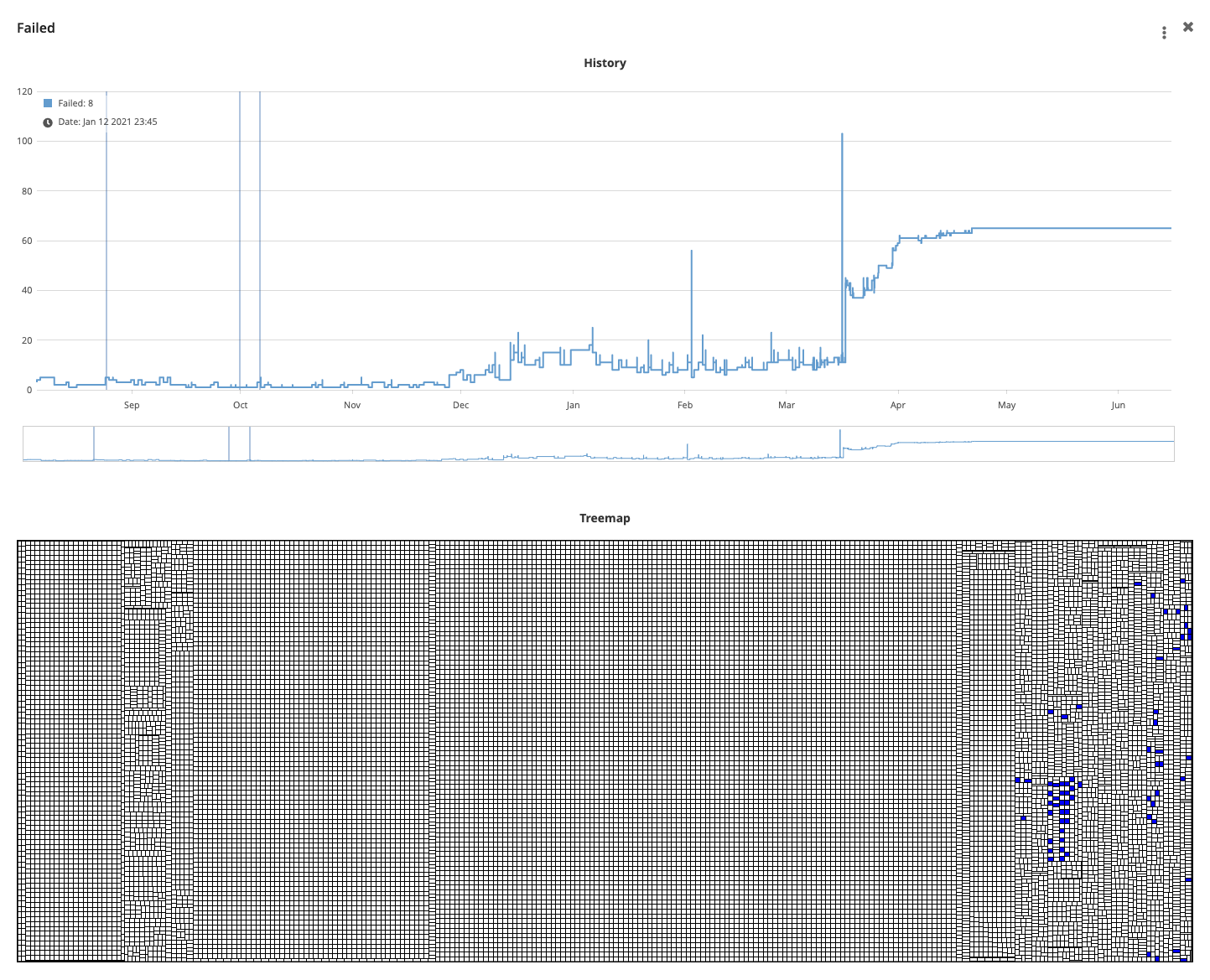
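To illustrate how container metrics relate to the individual tests below them, the following Python sketch sums counts and durations for every container on a test's path. The test records, the `/` path separator, and the field names are assumptions made for this example; they do not describe Teamscale's internal data model, and the Result assessment is left out because its aggregation rule is not specified here.

```python
from collections import defaultdict

# Hypothetical test records: (path, result, duration in seconds).
TESTS = [
    ("src/util/StringTest/testTrim", "passed", 0.2),
    ("src/util/StringTest/testSplit", "failed", 0.5),
    ("src/io/FileTest/testRead", "skipped", 0.0),
]


def aggregate(tests):
    """Sum count, per-result counts, and duration for every container of each test."""
    metrics = defaultdict(lambda: {"count": 0, "failed": 0, "skipped": 0,
                                   "successful": 0, "duration": 0.0})
    for path, result, duration in tests:
        parts = path.split("/")
        # Every proper prefix of the path is treated as a container of the test.
        for depth in range(1, len(parts)):
            entry = metrics["/".join(parts[:depth])]
            entry["count"] += 1
            entry["duration"] += duration
            if result == "failed":
                entry["failed"] += 1
            elif result == "skipped":
                entry["skipped"] += 1
            elif result == "passed":
                entry["successful"] += 1
    return dict(metrics)


if __name__ == "__main__":
    for container, values in sorted(aggregate(TESTS).items()):
        print(container, values)
```

Each test contributes to all of its ancestors, so the values of a container are the sums over everything displayed beneath it.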

You can click on any of the metrics to see a trend of the metric over time and a treemap of the included elements that shows which elements are affected by the metric.

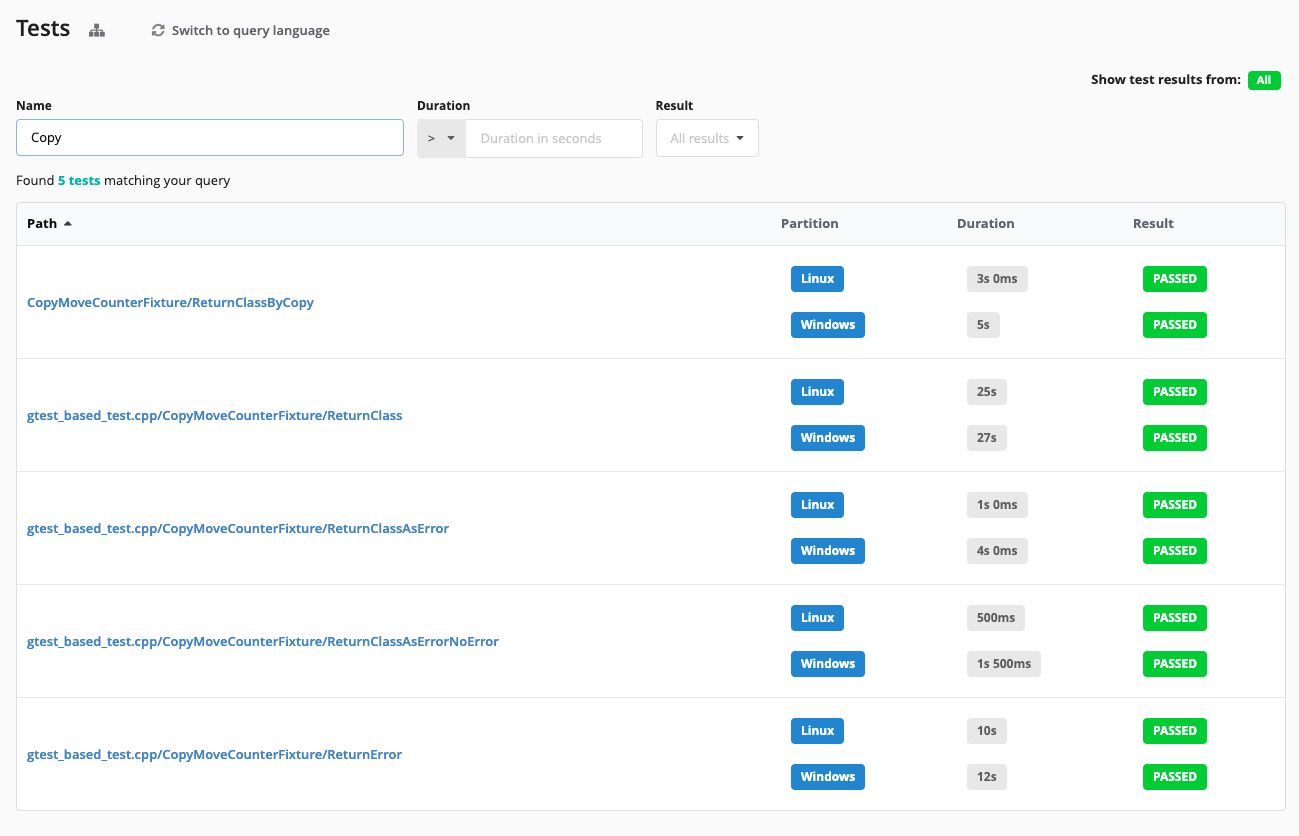

Test Filter View

The filter view lets you filter tests based on certain criteria.

Actions

This view contains two actions:

- Hierarchy: The button brings you back to the hierarchical view of the tests.

- Switch to query language: The button switches the view from the basic mode to the query language mode. The query language gives you much more control over which tests are selected.

Basic Mode

- Name: Specifies a regular expression that is matched against the test names.

- Duration: Specifies an upper or lower bound for the test execution duration. Note that this always uses the maximum duration across all partitions. If you need more fine-grained control over how partitions are treated, switch to the query language mode.

- Result: Specifies which test results should be included in the filtered list.

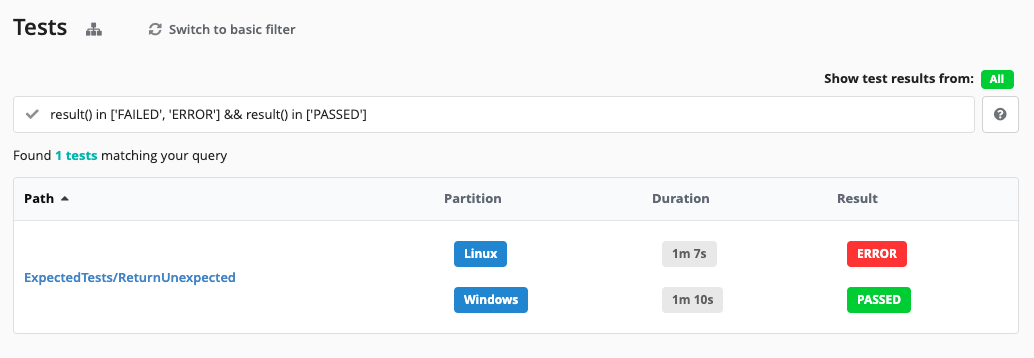
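The three basic-mode criteria can be pictured with a small Python model. This is only a sketch: the data layout, the `basic_filter` function, and its parameter names are hypothetical. It also assumes that the name pattern may match anywhere in the name and that a test matches the result filter if any of its partitions has one of the selected results. Only the "maximum duration of all partitions" rule is taken from the text above.

```python
import re

# Hypothetical test data: name -> {partition: (result, duration in seconds)}.
TESTS = {
    "LoginTest": {"Windows": ("passed", 12.0), "Linux": ("passed", 3.0)},
    "LogoutTest": {"Windows": ("failed", 1.0)},
    "ExportTest": {"Linux": ("passed", 45.0)},
}


def basic_filter(tests, name_regex=None, min_duration=None, max_duration=None, results=None):
    """Return the names of tests that satisfy all given criteria."""
    matches = []
    for name, executions in tests.items():
        if name_regex is not None and not re.search(name_regex, name):
            continue
        # The duration criterion uses the maximum duration across all partitions.
        longest = max(duration for _, duration in executions.values())
        if min_duration is not None and longest < min_duration:
            continue
        if max_duration is not None and longest > max_duration:
            continue
        # Assumption: one partition with a selected result is enough to match.
        if results is not None and not any(r in results for r, _ in executions.values()):
            continue
        matches.append(name)
    return matches
```

For instance, `basic_filter(TESTS, max_duration=10)` excludes `LoginTest` in this model, because its slowest partition (12 seconds) exceeds the bound even though the Linux run took only 3 seconds.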

Query Language Mode

For example, to display all tests that fail on one platform but pass on another, enter `result() in [failed, error] && result() in [passed]` into the query box. After pressing Enter, the list of matching tests is displayed below the box. The input box also provides autocompletion to simplify writing queries.

Each test in the returned list provides a link to additional details, along with the partition, duration, and result of its executions. The partition selector in the top right can be used to hide the durations and results of partitions you are currently not interested in. If you want to remove tests from certain partitions from the results entirely, you need to filter them out via the query. For example, `partitions !in [Windows]` shows only the tests that have no execution information for the Windows partition.
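The two example queries in this section can be modeled in a few lines of Python. This is a sketch of the semantics as described here, not Teamscale's implementation. The data and helper names are hypothetical, and it assumes that `in` on a list of values matches when at least one value is in the set and that `!in` matches when none is.

```python
# Hypothetical data: test name -> {partition: result}.
TESTS = {
    "ParserTest": {"Windows": "failed", "Linux": "passed"},
    "LexerTest": {"Windows": "passed", "Linux": "passed"},
    "SocketTest": {"Linux": "error"},
}


def result(executions):
    """Model of result() without arguments: the results from all partitions."""
    return list(executions.values())


def any_in(values, allowed):
    """Assumed meaning of `values in [...]`: at least one value is in the set."""
    return any(v in allowed for v in values)


def none_in(values, excluded):
    """Assumed meaning of `values !in [...]`: no value is in the set."""
    return not any_in(values, excluded)


# result() in [failed, error] && result() in [passed]
inconsistent = [name for name, ex in TESTS.items()
                if any_in(result(ex), {"failed", "error"}) and any_in(result(ex), {"passed"})]

# partitions !in [Windows]
not_on_windows = [name for name, ex in TESTS.items() if none_in(ex.keys(), {"Windows"})]

if __name__ == "__main__":
    print(inconsistent)
    print(not_on_windows)
```

Under these assumptions, a test is reported as inconsistent only if it has executions in at least two partitions with differing outcomes, which is why `SocketTest` does not appear in the first list.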

Test Query Language

| Element | Description |
| --- | --- |
| Attributes | For each test, the attributes `name`, `partitions`, `codeLocation`, and `executionUnit` are defined. |
| Comparison Operators | Attributes can be compared to values using the usual comparison operators `=`, `!=`, `>`, `<`, `>=` and `<=`. The order is always attribute, operator, value. You cannot compare attributes to one another. |
| Set Query | You can use the keyword `in` together with square brackets to create set queries. An example would be `result() in [passed, skipped]`. |
| Logical Operators | Comparisons can be connected using the logical operators `&&` (and), `\|\|` (or), and `!` (not). Additionally, parentheses can be used to group subexpressions. |
| Like Operator | The like operator `~` can be used to match a value against a regular expression. |
| query | The special function `query` can be used to reference stored queries. For example, `query('Failed Tests')` can be used in a query to reference a query named 'Failed Tests'. The stored query string is inlined into the present query. For example, if 'Failed Tests' corresponds to `type = test and status = 'failed'`, a query `query('Failed Tests') and name ~ 'End.*'` is expanded to `(type = test and status = 'failed') and name ~ 'End.*'`. |
| State Queries | The operator `inState` expresses that a test has to be in a given state for a certain time. The operator can contain any subquery and is followed by a comparison operator (`>`, `<`, `>=`, `<=`) with a time value. An example would be `inState(result() in [failed, error]) > 14d` to display all tests that have been failing for at least 14 days. The number can be followed by suffixes for days (`d`), hours (`h`), minutes (`m`), seconds (`s`) and milliseconds (`ms`). The default when no suffix is given is days. Dates can also be used, for example: `inState(!(result() in [failed, error])) > 2020-1-12` returns all tests that have not failed since 2020-1-12. Additionally, the special value `@startOfMonth` can be used to get the duration of time elapsed since the start of the current month, e.g. `inState(closed=false) < @startOfMonth` to display all issues closed since the start of the current month. |
| result/duration functions | The `result` and `duration` functions query the test execution result and the test duration of a test. Without any parameters, the function returns a list of the values from all partitions. Passing one or multiple values as arguments filters the values to the matching partitions. For example, `result('Unit Tests', 'Integration Tests') in [error]` selects all tests that failed in either the 'Unit Tests' or the 'Integration Tests' partition. `max(duration('Some .* pattern')) > 30s` selects all tests that have a runtime of more than 30s in one of the matching partitions. |
| Aggregation functions | The `size`, `min`, and `max` functions aggregate a list of values to a single value that can be compared against a static value. For example, `size(partitions) = 0` lists all tests that have no execution information yet, and `max(duration()) > 1m` lists all tests with a duration of more than 1 minute. |
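The time-value suffixes listed under State Queries can be made concrete with a short Python sketch. The function name `parse_time_value` is hypothetical, and the sketch assumes whole-number values. It covers only the suffix rules (`d`, `h`, `m`, `s`, `ms`, with days as the default), not dates or `@startOfMonth`.

```python
import re
from datetime import timedelta

# Suffixes named in the State Queries description, mapped to timedelta keywords.
_UNITS = {"d": "days", "h": "hours", "m": "minutes", "s": "seconds", "ms": "milliseconds"}


def parse_time_value(text):
    """Parse values such as '14d', '30s', '250ms', or '7' (days by default)."""
    # 'ms' is listed before 'm' and 's' so that the two-letter suffix is tried first.
    match = re.fullmatch(r"(\d+)(ms|d|h|m|s)?", text.strip())
    if match is None:
        raise ValueError(f"not a time value: {text!r}")
    number, suffix = match.groups()
    unit = _UNITS[suffix or "d"]  # no suffix means days
    return timedelta(**{unit: int(number)})


if __name__ == "__main__":
    for value in ("14d", "7", "30s", "250ms"):
        print(value, "->", parse_time_value(value))
```

In this model, `14d` and `14` denote the same span, while `1m` is one minute rather than one month.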

Some interesting use cases could be:

- Tests that fail in at least one partition (track failing tests)

`result() in [failed, error]`

- Tests that fail in one partition and pass in another (track inconsistent test results)

`result() in [failed, error] && result() in [passed]`

- Tests that have been failing for longer than 14 days. Failing tests are fine, actually that’s what they are made for, however, they should be fixed soon.

`inState(result() in [failed, error]) > 14d`

- Tests that have a duration > X sec. (track long running tests)

`max(duration()) > 30s`

or for a specific partition

`max(duration('Unit Tests')) > 10s`

- Tests that have never failed (track candidates of “useless” tests)

`inState(result() !in [failed, error]) > 1970-01-01`

Test Query Metrics

You can store a query as a metric with the Add as metric button. It will then show up in the right sidebar. Stored test query metrics can then be referenced from other places like the metric-related dashboard widgets.