Test Gap Analysis

This article describes the concept behind Teamscale's Test Gap analysis and explains some of its technical details.

Goal of Test Gap Analysis

Testing is an integral part of a software product’s lifecycle. Some teams execute their tests continuously during development; others have a separate testing phase before each release. The goal, however, is always the same: finding bugs. The more, the better.

Research has shown that untested changes are five times more likely to contain errors than other parts of the system (“Did We Test Our Changes?”, Eder et al., 2013). It is therefore a good idea to ensure that every change in your code base is tested at least once. This holds whether you run selected manual tests or execute your entire automated test suite every night.

Do you really know what your tests do? Which parts of the system they touch? And do you know exactly what has changed in your system since the last release? Every class, every method that was modified in some way?

Teamscale's Test Gap analysis answers these questions. It points out untested changes within a given time frame, for instance, the time since the last release. Such code usually deserves extra attention before it is released.
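At its core, a test gap is a method that was changed in the time frame but not executed by any test. The following minimal Python sketch expresses this as a set difference. The `MethodId` type and the `find_test_gaps` function are hypothetical illustrations, not Teamscale's API, and the sketch assumes that the sets of changed and tested methods have already been computed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MethodId:
    """Hypothetical method identifier: file path plus method name."""
    path: str
    name: str


def find_test_gaps(changed: set[MethodId], tested: set[MethodId]) -> set[MethodId]:
    """Return methods that changed in the time frame but were not tested.

    `changed`: methods modified since the baseline (e.g., the last release).
    `tested`: methods with coverage recorded after their latest change.
    """
    return changed - tested


changed = {
    MethodId("Cart.java", "addItem"),
    MethodId("Cart.java", "checkout"),
    MethodId("Price.java", "round"),
}
tested = {MethodId("Cart.java", "addItem")}
gaps = find_test_gaps(changed, tested)
print(sorted((m.path, m.name) for m in gaps))
# → [('Cart.java', 'checkout'), ('Price.java', 'round')]
```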

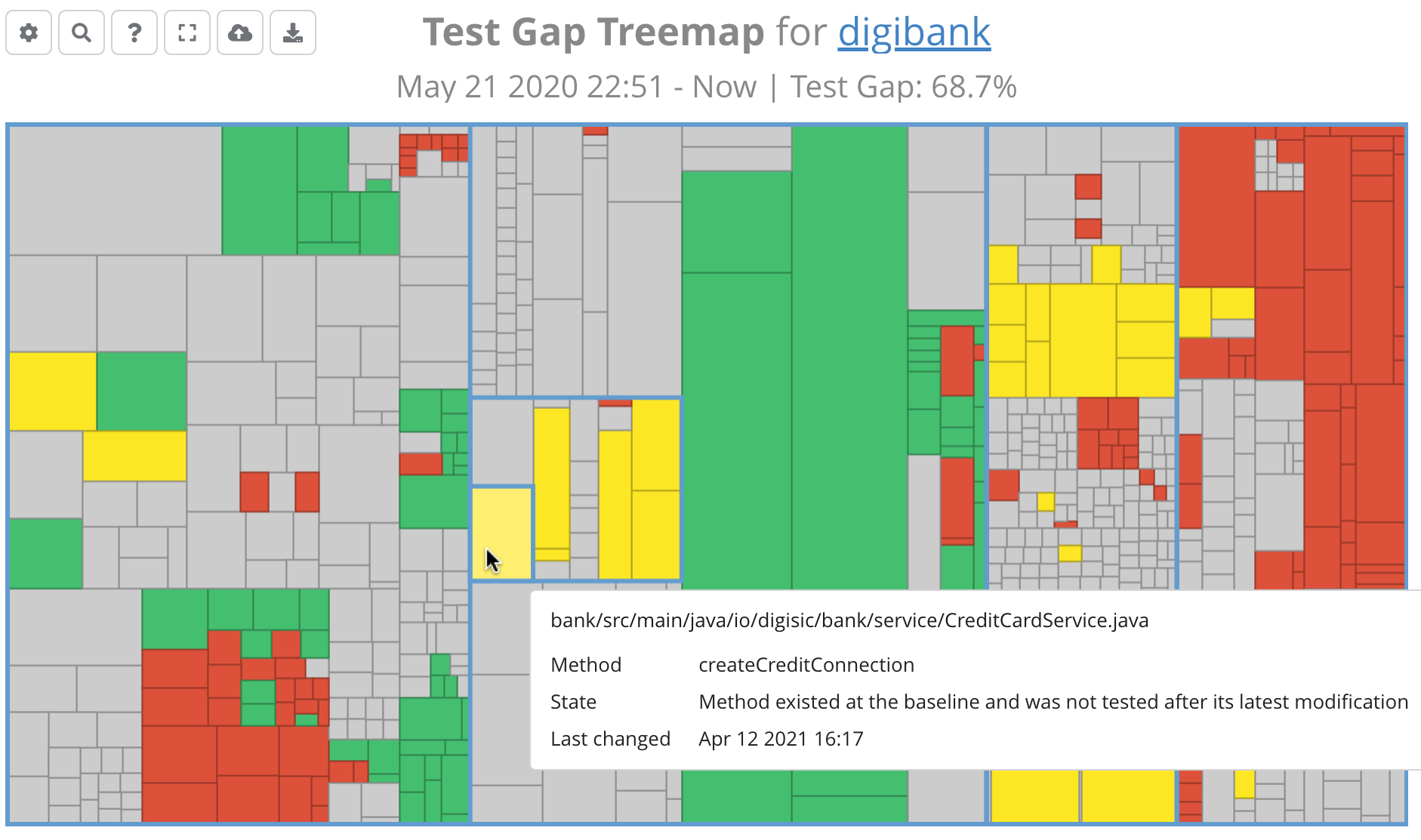

Visualization of Test Gaps in Teamscale

Teamscale's main tool for displaying test gaps is the treemap.

You can find an in-depth tutorial on how to interpret these treemaps in Reading Test Gap Treemaps.

Technical Details

In this section, we describe some technical details of Teamscale's Test Gap analysis.

Focus on Method Granularity

The Test Gap analysis in Teamscale works exclusively at method granularity. The analysis considers a method covered if (and only if) a coverage report marks at least one line in the method as covered.

In theory, we could base the Test Gap analysis on a finer coverage granularity (e.g., individual lines). However, using coverable lines as the basic entity would make the results harder to grasp than using methods.

Line-based granularity would also significantly increase the analysis cost in run time and memory usage. In our experience, the gain in precision does not justify this cost: method-level granularity usually gives a clear picture of which code regions have been neglected in testing.
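The rule that one covered line makes the whole method covered can be sketched as follows. `Method`, its line-range fields, and `covered_methods` are hypothetical names chosen for illustration; the sketch assumes that method boundaries are already known, for example from parsing the source file.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Method:
    """Hypothetical method record: name plus inclusive line range in its file."""
    name: str
    start_line: int
    end_line: int


def covered_methods(methods: list[Method], covered_lines: set[int]) -> set[str]:
    """A method counts as covered iff at least one of its lines is covered."""
    return {
        m.name
        for m in methods
        if any(line in covered_lines for line in range(m.start_line, m.end_line + 1))
    }


methods = [Method("parse", 10, 25), Method("validate", 27, 40), Method("render", 42, 60)]
print(sorted(covered_methods(methods, {12, 45})))
# → ['parse', 'render']
```

Note that `render` counts as covered even though only one of its 19 lines was executed; this is the intended consequence of working at method granularity.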

Supplying Coverage Reports to Teamscale

The Test Gap analysis relies on code coverage reports generated by external profiling tools. Teamscale does not execute the analyzed code and therefore cannot determine on its own which code the tests execute.

We support a wide range of coverage profilers for different languages. In our guide to profiling, you can find the right tool for your technologies.
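Profilers typically report coverage per line, which then has to be mapped onto methods. As an illustration only, the sketch below reads an LCOV-style trace, one widely used line-coverage format, and collects the covered lines per source file. Whether and how Teamscale ingests this particular format is not described here (see the profiling guide for supported formats), and `parse_lcov_lines` is a hypothetical helper.

```python
from collections import defaultdict


def parse_lcov_lines(report: str) -> dict[str, set[int]]:
    """Collect covered line numbers per source file from an LCOV trace.

    Only SF (source file) and DA (line data) records are handled; function,
    branch, and summary records are ignored in this sketch.
    """
    covered: dict[str, set[int]] = defaultdict(set)
    current_file = None
    for raw in report.splitlines():
        line = raw.strip()
        if line.startswith("SF:"):
            current_file = line[3:]
        elif line.startswith("DA:") and current_file is not None:
            # DA:<line number>,<execution count>[,<checksum>]
            fields = line[3:].split(",")
            line_no, hits = int(fields[0]), int(fields[1])
            if hits > 0:
                covered[current_file].add(line_no)
        elif line == "end_of_record":
            current_file = None
    return dict(covered)


report = """SF:src/cart.py
DA:10,3
DA:11,0
DA:12,1
end_of_record
SF:src/price.py
DA:5,0
end_of_record
"""
print(parse_lcov_lines(report))
```

Files without any executed line do not appear in the result, which is sufficient for deciding which methods are covered.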

Coverage Processing in Project History

When Teamscale processes a coverage report, it creates an artificial commit in the project history that applies this coverage to the code. Teamscale does not push this commit to the version-control system (Git, SVN, ...); the artificial commit exists only internally in Teamscale.

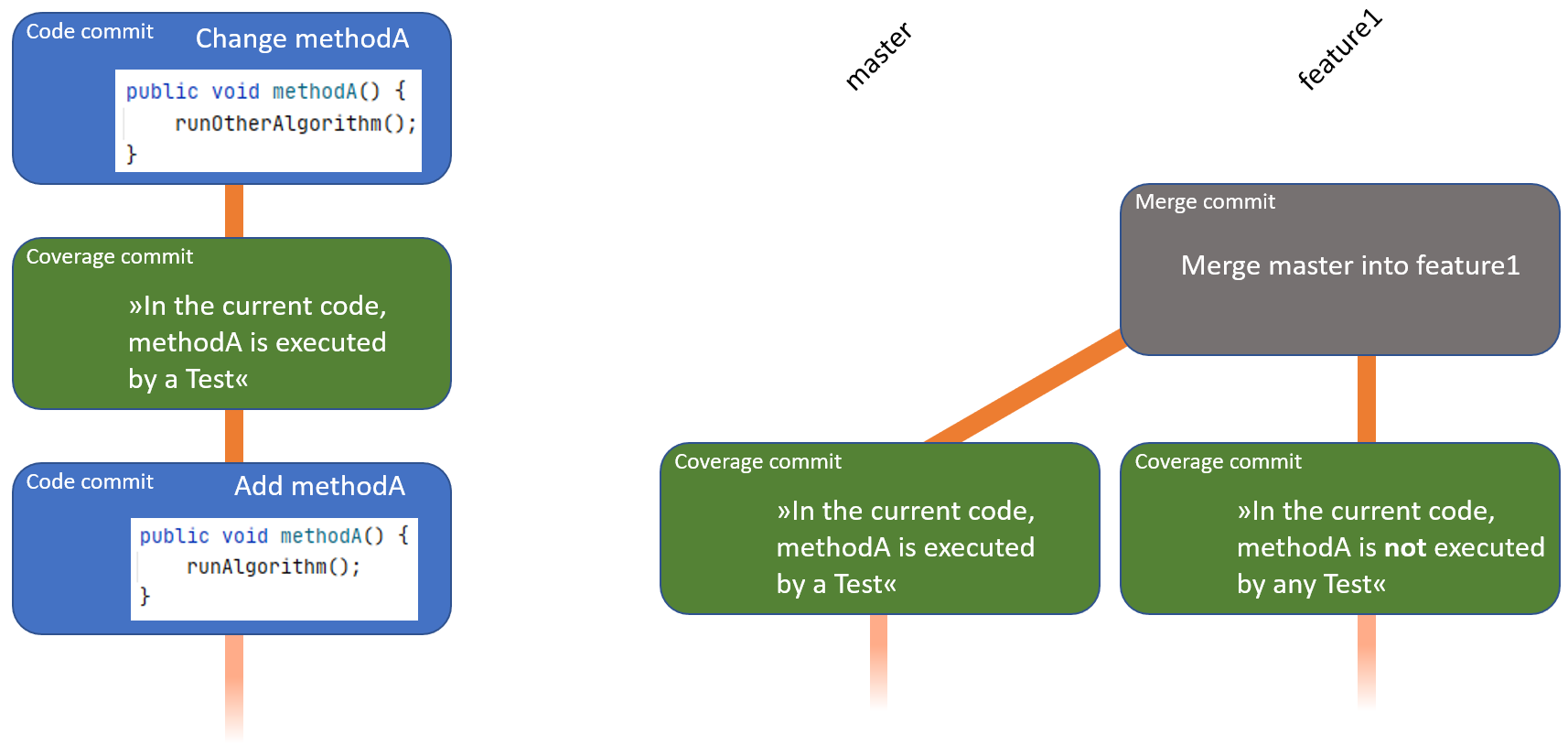

The left side of the illustration above shows a scenario in which coverage is uploaded for a method and the method is changed afterwards. The right side shows a merge scenario.

If a coverage commit reports that a method is covered on a given branch at a given timestamp, we consider the method covered until its next change. In the scenario on the left, this means that the method is covered between the coverage commit and the second code commit. Because the second code commit changes the method, the method is marked as untested until new coverage for it is uploaded.
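The "covered until the next change" rule can be modeled as a replay of a single method's history on one branch. In this hypothetical sketch, each `Event` is either a code commit that changes the method or a coverage commit that reports it as covered. Coverage commits that do not cover the method are simply left out, on the assumption that they do not invalidate earlier coverage. The example reproduces the scenario on the left.

```python
from dataclasses import dataclass
from typing import Literal


@dataclass(frozen=True)
class Event:
    """Hypothetical history entry for one method on one branch."""
    timestamp: int
    # "change": a code commit modifies the method;
    # "coverage": a coverage commit reports the method as covered.
    kind: Literal["change", "coverage"]


def is_covered_at(history: list[Event], timestamp: int) -> bool:
    """True if the method was covered after its latest change at or before `timestamp`."""
    covered = False
    for event in sorted(history, key=lambda e: e.timestamp):
        if event.timestamp > timestamp:
            break
        # A change invalidates earlier coverage; a coverage commit restores it.
        covered = event.kind == "coverage"
    return covered


# Left scenario: code commit, coverage commit, second code commit.
history = [Event(1, "change"), Event(2, "coverage"), Event(3, "change")]
print([is_covered_at(history, t) for t in (1, 2, 3)])
# → [False, True, False]
```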

In the scenario on the right, a method is changed and covered on the master branch. The merge applies the method changes from master to the feature branch, so the method counts as changed on the feature branch after the merge. The coverage from master carries over to the feature branch if (and only if) the merged method is unchanged with respect to its predecessor on master; in that case, the test coverage from master remains valid after the merge.
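The merge rule can be sketched in the same spirit. Here each branch is a hypothetical mapping from method names to method bodies, and a plain text comparison stands in for whatever change detection Teamscale actually uses. A method on the feature branch inherits master's coverage only if its merged body is identical to its body on master.

```python
def merge_coverage(
    merged: dict[str, str],
    master: dict[str, str],
    covered_on_master: set[str],
) -> set[str]:
    """Return the methods on the feature branch that keep master's coverage.

    merged: method name -> body on the feature branch after the merge
    master: method name -> body on master (the merge source)
    covered_on_master: methods considered covered on master at merge time
    """
    return {
        name
        for name in covered_on_master
        if name in merged and merged[name] == master.get(name)
    }


master = {"total": "return sum(items)", "tax": "return total * 0.2"}
# The feature branch also edited `tax`, so its merged body differs from master.
merged = {"total": "return sum(items)", "tax": "return round(total * 0.2, 2)"}
print(merge_coverage(merged, master, {"total", "tax"}))
# → {'total'}
```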